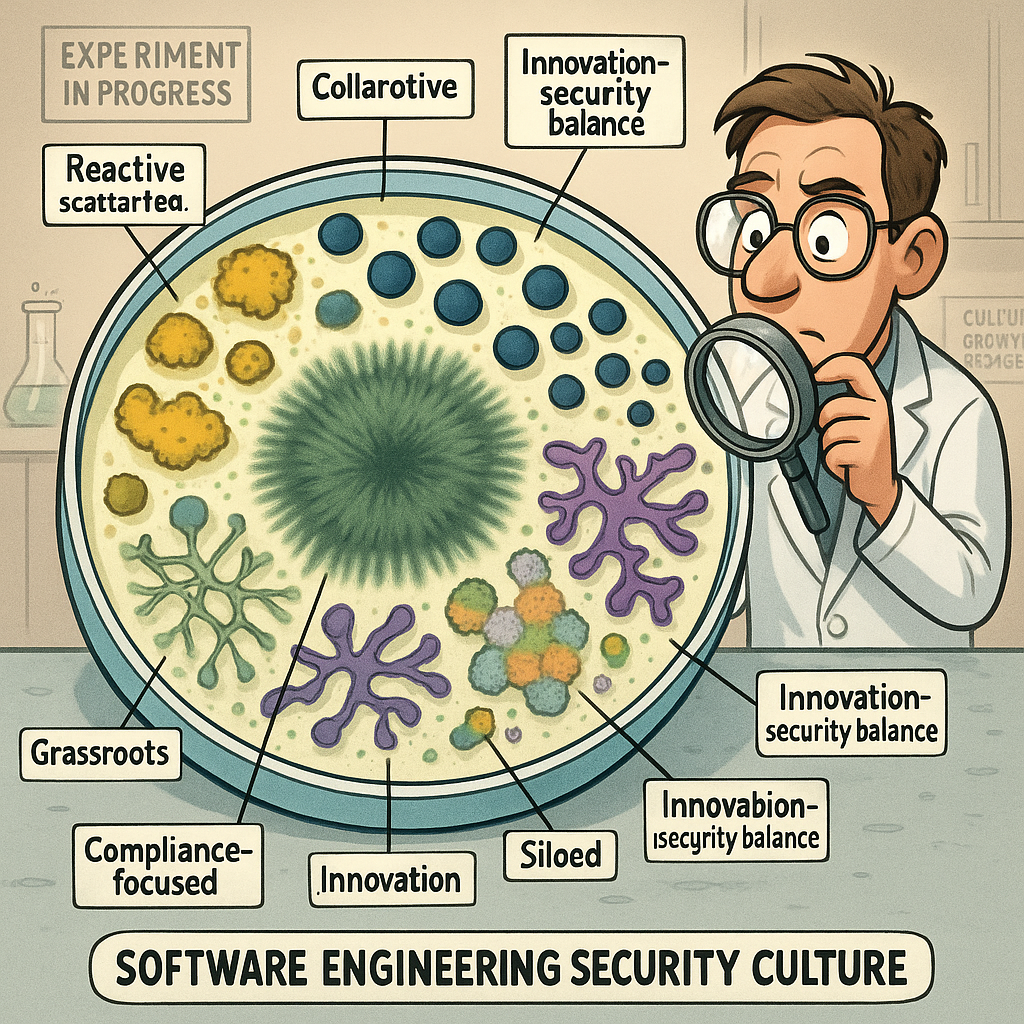

Software Engineering Security Culture

We need to fix the culture of the software engineering industry, from top to bottom. Here are just some of the issues as I see them, and what we should be doing about them.

Software Engineering Culture

What we have here is an attitude problem.

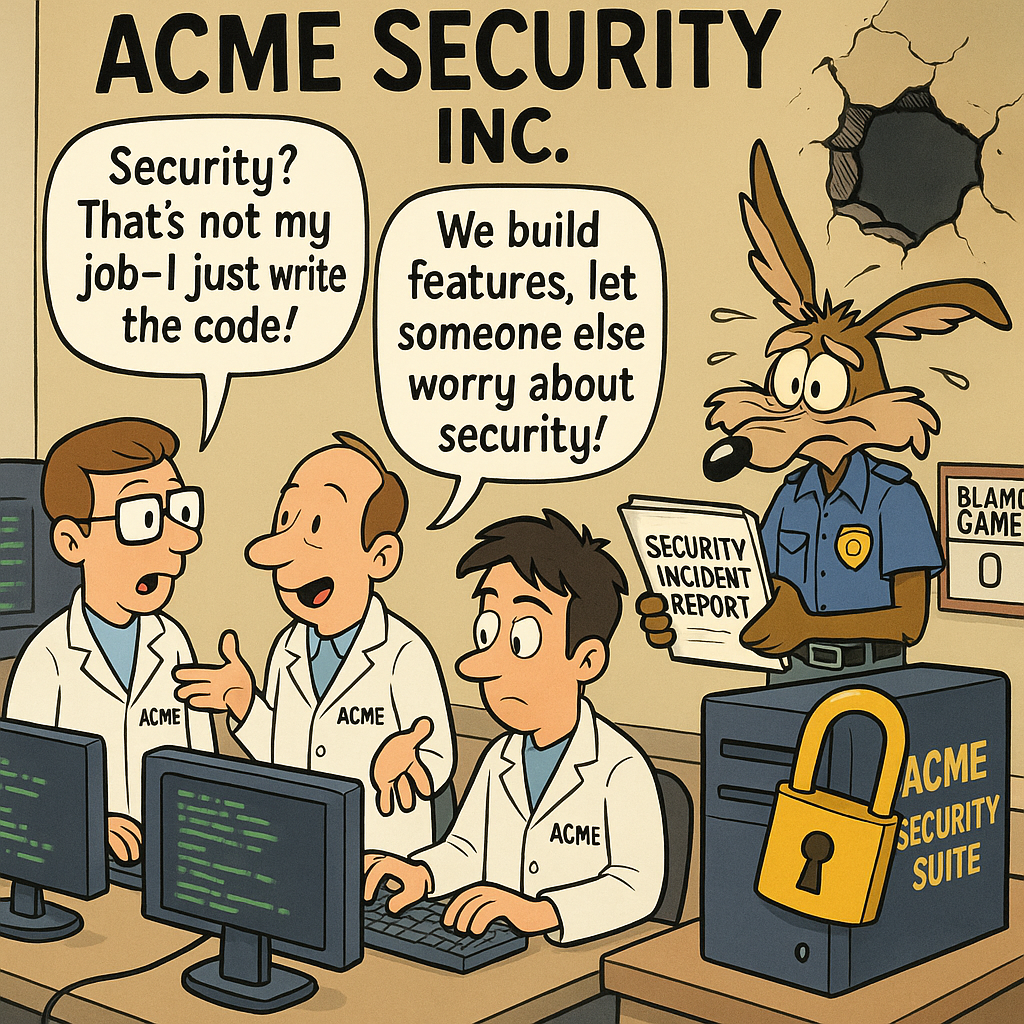

Rethinking Accountability in Software Security

As software engineers, we can no longer afford to say that security isn’t our job or claim that our sole focus should be on building features. My own background is in software engineering, and from the very beginning, I was taught to design systems holistically, factoring in both functional and non-functional requirements. So what changed along the way? The fundamentals haven’t shifted: building secure systems is part of our core mandate.

The reality is, expecting a single Application Security Engineer to review and remediate the work of 200 software engineers is neither sustainable nor fair. Security is a shared responsibility. It’s essential that we keep our own house in order, with the security team there to support and enable us, not to shoulder the entire burden or clean up after us. Accountability needs to be built into our processes, and software engineers should understand that negligence can result in real consequences for the company and for our colleagues.

That said, this isn’t about punishing honest mistakes. We all know that errors are part of the creative process; if you’re not making mistakes, you’re not pushing boundaries. The real expectation is that we put forth our best effort to ensure our code is as safe as possible. By fostering a culture of responsibility, we empower ourselves and each other to build software that is not just innovative, but also resilient and trustworthy.

Embracing Proactive Security: Why Waiting Isn’t an Option

One comment I often hear during discussions around mitigation strategies or non-functional security requirements is: “But as far as I know, that other team isn’t doing it this way, so why should we?” Personally, I find this mindset incredibly frustrating.

If we know a certain approach is the strategic direction for the organization, wouldn’t it make sense to plan for it now, rather than delaying and playing catch-up later? It’s almost always more efficient and cost-effective to implement security requirements from the start than to retrofit them after the fact. Waiting often means extensive rework, more complicated changes, and ultimately, greater risk to the project.

I can’t help but wonder: am I missing something? To me, the logic is clear. Proactively aligning with our security strategy isn’t just about compliance; it’s about building better, more resilient systems and saving ourselves unnecessary headaches down the line. At least, it should be.

Another frustration I often encounter during threat modeling sessions is when I highlight a threat related to an existing feature, only to hear, “That’s part of an existing feature, so it’s out of scope.” Whether the threat was previously overlooked or has only become relevant because of the proposed changes, it’s concerning when teams aren’t willing to even entertain the idea of including it in the threat model, let alone discuss potential mitigations.

Ignoring these issues doesn’t make them go away; in fact, it can introduce unnecessary risk into the system. Threat modeling should be a holistic exercise, not just a checkbox for new features. If a risk is identified, regardless of when or how it emerged, it deserves our attention and a thoughtful response. Isn’t that the whole point of threat modeling in the first place?

I’m not saying you should threat model the entire system if it has already been threat modeled in its current state, but at least look at the effects a new feature might have on existing functionality.

This attitude is like deciding to put solar panels on the roof of a run-down shack that can’t support the additional weight, on the grounds that the shack is still standing after 20 years with the same roof. A new feature may adversely impact a pre-existing one.

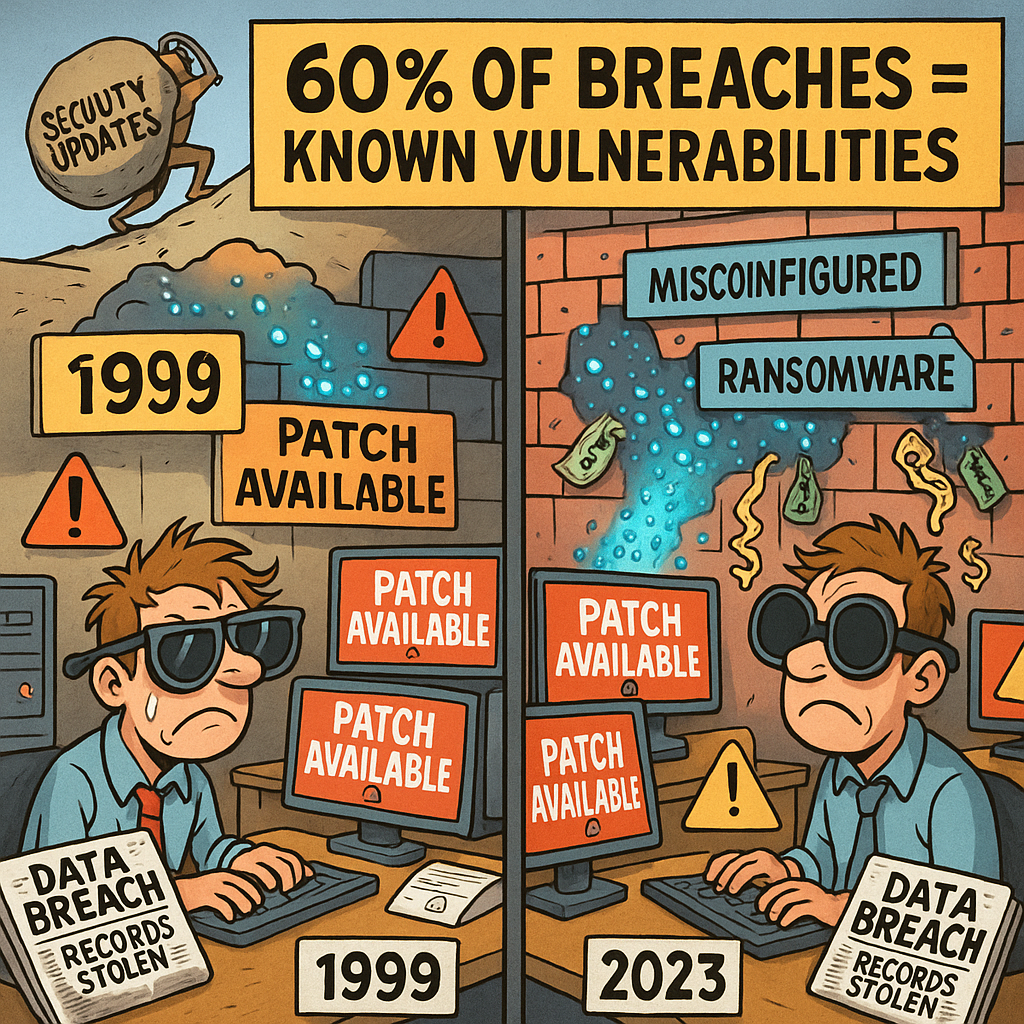

History repeating itself

We keep seeing the same errors being made time and time again, sometimes even by the same organisations. An incident hits the headlines and, for a few months, everyone is all over it. Gradually the noise dies down, all is forgotten, and it’s back to business as usual.

Somehow, we need to remember our metaphorical dead. Maybe we should put up monuments after incidents so that people remember what happened.

Just get on with it and do your bit!

Earlier in my career, when my primary focus was on software engineering rather than security specifically, I always aimed to “keep a clean house.” As I navigated through the codebase, I would actively fix issues, secure components, optimize performance, and reduce cognitive complexity whenever possible. This mindset just seemed natural to me.

Interestingly, I’ve learned that this approach is quite common in Japan, where it is known as Monozukuri, but apparently it hasn’t taken root in much of the rest of the world. From what I’ve observed, relatively few software engineers routinely work this way.

This raises an important question: Why isn’t this standard practice everywhere? Is there something preventing us from embedding this mindset into development culture more broadly? I believe there’s a real opportunity to discuss how we can make continuous improvement and proactive code stewardship part of the global engineering ethos.

AppSec Engineers and Resources

We can’t do everything, help us help you!

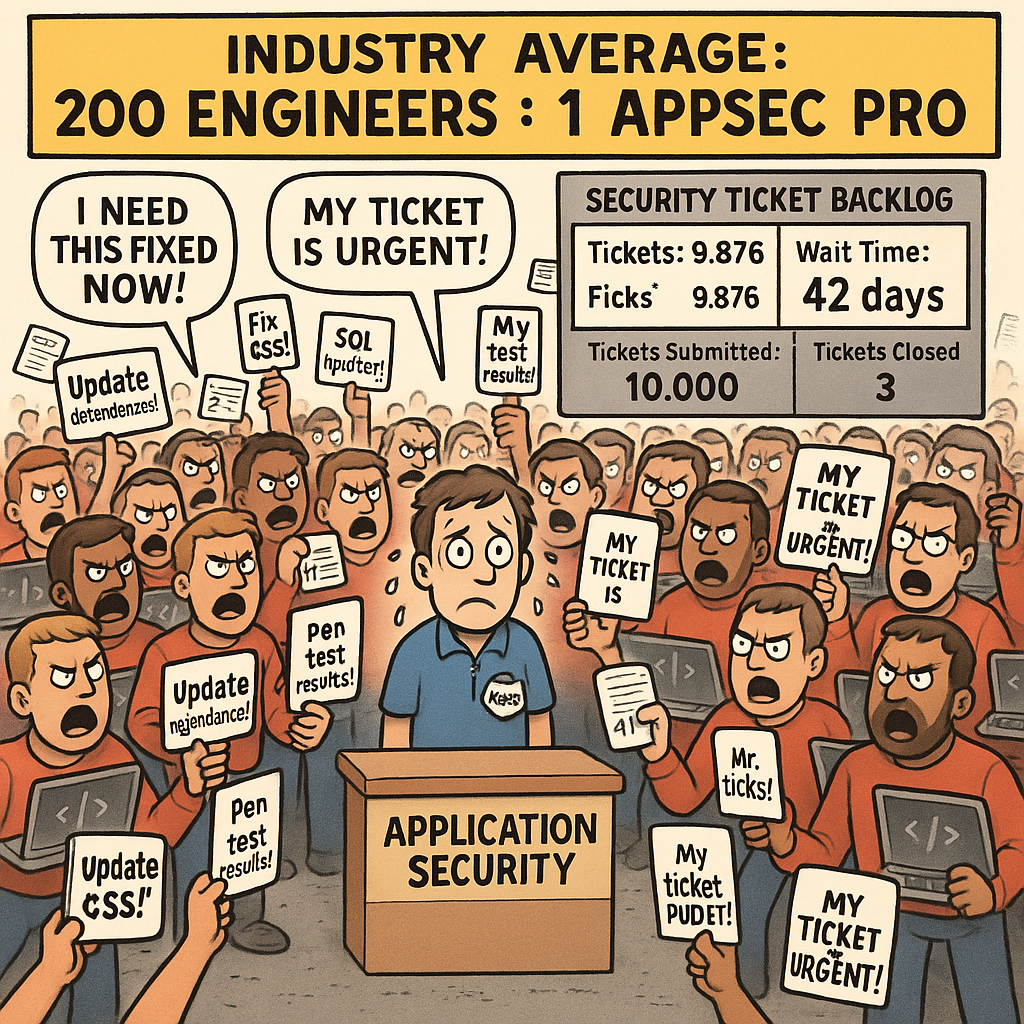

If the ratio of AppSec Engineers to Software Engineers is, on average, 1 to 200, then without either:

- The help of the Software Engineers themselves and the collaboration of their management and product people

- The tooling necessary to automate and optimise

they’re clearly going to be overwhelmed and fighting a losing battle.

Don’t expect miracles!

In AppSec, we are so used to optimising our processes to scale without spending any budget, because most of the time we simply don’t have any. The whole company, from the top down, needs to embrace security and go the extra mile. We need people and tools, as well as capacity from the Software Engineering team. If we actually got the people we need and the budget to purchase the tools, imagine what we might be capable of.

Imagine what we could achieve if we had support!

I’m not endorsing any of the tools in the cartoon above; they are used as examples because they are well-known names.

Can you imagine how your AppSec team would react if you gave them all these tools, and how much load it would take off them?