Is Your AI Philosophy Broken?

AI-Generated Code: Productivity Gains, Security Pains, and the Real Cost of Vulnerabilities

Key Takeaway:

While AI coding tools promise impressive productivity gains, the data shows they also dramatically increase both the number and cost of software vulnerabilities. Without robust human oversight, your security backlog, and your remediation budget, could spiral out of control.

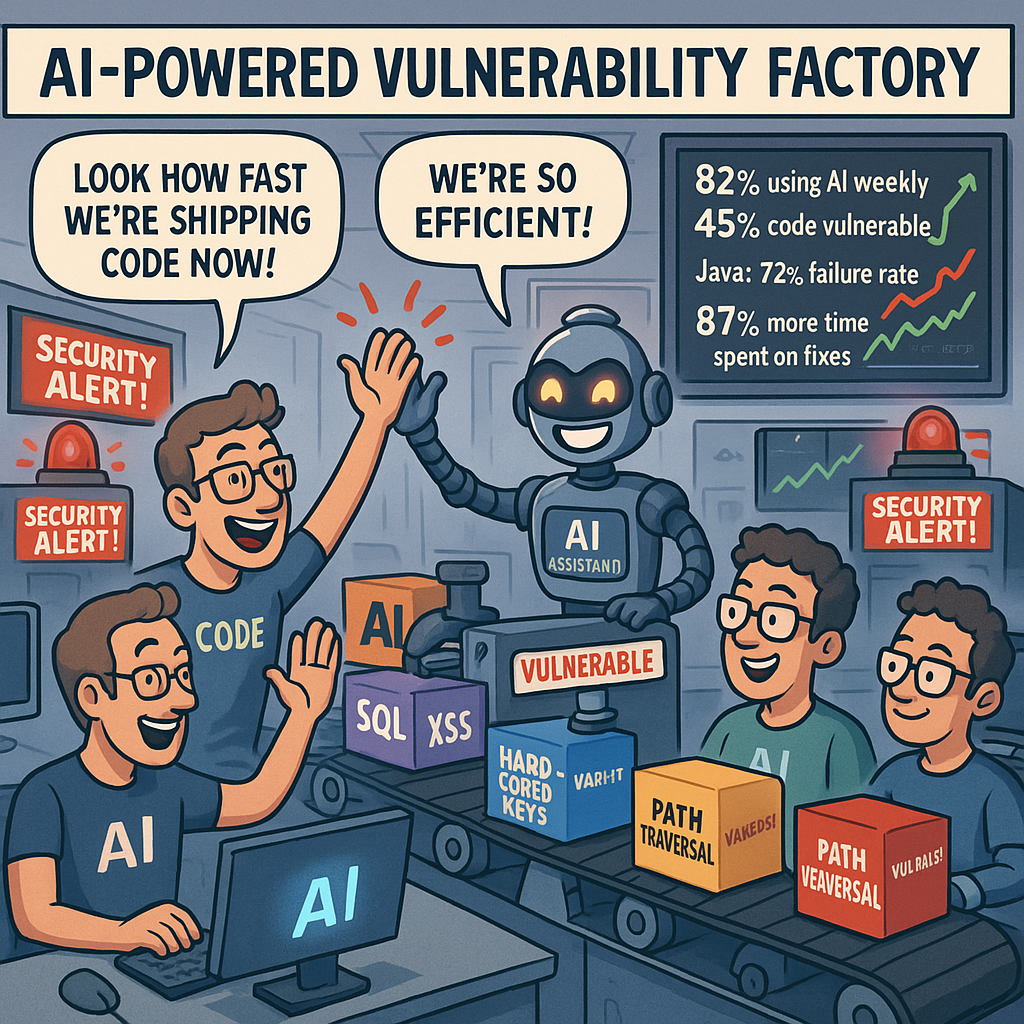

The Productivity Paradox: More Code, More Risk

There’s no denying the buzz around AI-powered coding assistants. Teams using these tools are, on average, producing 44% more code than their non-AI counterparts. Industry data shows that about 41% of the code in these AI-enabled teams is generated by AI. Put differently, if a traditional team produces 100 units of code, an AI-enabled team produces 144, and about 59 of those units (41% of 144) come from the AI. The AI alone is producing roughly 59% as much code as an entire baseline team.

But here’s where the productivity story takes a sharp turn. According to the latest Veracode report, only 55% of AI-generated code is free from vulnerabilities, compared to 75% for human-written code (though some sources now put the human figure closer to 55% as well). Either way, the volume of vulnerabilities being introduced has surged, from 2,079 per month in 2022 to an average of 3,465 per month today, a 66.7% increase.

Vulnerability Generation: The Hidden Efficiency

Let’s break down what this means in practice:

Baseline (Non-AI) Team:

- Produces 100 units of code

- 25% of that code contains vulnerabilities → 25 vulnerabilities

AI-Enabled Team:

- Produces 144 units of code (44% more)

- 59 units are AI-generated (41% of total)

- 45% of AI code is vulnerable; 25% of human code is vulnerable

- Total vulnerabilities: (59 × 0.45) + (85 × 0.25) ≈ 47.8

That’s a 91% increase in vulnerabilities generated by the AI-enabled team compared to the baseline. So, while you’re shipping features faster, you’re also accelerating the creation of security debt at nearly double the previous rate.
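The arithmetic behind these numbers can be reproduced with a short script. This is a minimal sketch, not a measurement tool: the constant and function names are hypothetical, and the rates are simply the figures quoted above.

```python
# Illustrative model of the figures quoted in the text; all names are hypothetical.
BASELINE_UNITS = 100     # code units produced by a non-AI team
OUTPUT_UPLIFT = 0.44     # AI-enabled teams produce 44% more code
AI_SHARE = 0.41          # share of the AI-enabled team's code written by AI
AI_VULN_RATE = 0.45      # 100% minus the 55% vulnerability-free rate
HUMAN_VULN_RATE = 0.25   # 100% minus the 75% vulnerability-free rate


def vulnerabilities(total_units, ai_share, ai_rate, human_rate):
    """Expected vulnerabilities for a team with the given AI/human code mix."""
    ai_units = total_units * ai_share
    human_units = total_units - ai_units
    return ai_units * ai_rate + human_units * human_rate


baseline_vulns = vulnerabilities(BASELINE_UNITS, 0.0, AI_VULN_RATE, HUMAN_VULN_RATE)
ai_team_units = BASELINE_UNITS * (1 + OUTPUT_UPLIFT)
ai_team_vulns = vulnerabilities(ai_team_units, AI_SHARE, AI_VULN_RATE, HUMAN_VULN_RATE)
increase = ai_team_vulns / baseline_vulns - 1

print(f"Baseline vulnerabilities:   {baseline_vulns:.1f}")  # 25.0
print(f"AI-enabled vulnerabilities: {ai_team_vulns:.1f}")   # 47.8
print(f"Increase:                   {increase:.0%}")        # 91%
```

Changing the constants shows how sensitive the result is to the assumed rates, for example the alternative human figure mentioned earlier.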

| Metric | Baseline Team | AI-Enabled Team |
|---|---|---|
| Code units produced | 100 | 144 |
| Vulnerabilities generated | 25 | 47.8 |
| Vulnerability generation increase | - | +91% |
| Monthly vulnerabilities (2022 → 2025) | 2,079 | 3,465 |
| Monthly vulnerability increase | - | +66.7% |

Maintainability: Complexity and Cost

The story doesn’t end with just more vulnerabilities. The maintainability of AI-generated code is a growing concern. A recent study found that the average cyclomatic complexity of AI-written code is 5.0, 61% higher than the 3.1 average for human-written code. In plain terms, this means AI code is harder to understand, test, and fix.
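For readers unfamiliar with the metric, cyclomatic complexity roughly counts the independent paths through a piece of code: one, plus one for each decision point. The sketch below is a simplified approximation written for illustration, using Python's standard `ast` module. The function name and the two sample snippets are hypothetical, and production analyzers may count some constructs differently.

```python
import ast

# Node types treated as one decision point each in this simplified count.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def approx_cyclomatic_complexity(source: str) -> int:
    """Return 1 plus the number of decision points found in `source`."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra paths (one per extra operand).
            complexity += len(node.values) - 1
    return complexity


simple = """
def clamp(x, lo, hi):
    if x < lo:
        return lo
    if x > hi:
        return hi
    return x
"""

branchy = """
def classify(x, strict):
    if x is None:
        return "missing"
    for limit, label in ((0, "negative"), (10, "small")):
        if x < limit and strict:
            return label
    return "large" if x >= 100 else "medium"
"""

print(approx_cyclomatic_complexity(simple))   # 3
print(approx_cyclomatic_complexity(branchy))  # 6
```

Each extra path is another case a reviewer has to reason about and a tester has to cover, which is why a higher average matters for remediation effort.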

This complexity translates directly into operational pain:

- Mean Time to Remediate: +60%

- Average Cost to Fix Vulnerabilities: +74.5%

When you combine the 91% increase in vulnerabilities (a factor of 47.8 / 25 ≈ 1.91) with the 74.5% higher cost to fix each one (a factor of 1.745), the total cost impact is multiplicative rather than additive: 1.912 × 1.745 ≈ 3.34. On these figures, that is a staggering 234% increase in the cost of fixing vulnerabilities in AI-enabled teams compared to traditional teams.
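The compounding can be checked in a few lines. This is a sketch under one stated assumption: the 74.5% cost uplift is applied to every vulnerability in the AI-enabled team, including those in human-written code, which is what the 234% figure implies. The variable names are hypothetical.

```python
# Figures taken from the text; names are hypothetical.
baseline_vulns = 25.0
ai_team_vulns = 47.8
cost_per_fix_uplift = 0.745  # +74.5% average cost to fix one vulnerability

# Assumption: the uplift applies to every vulnerability in the AI-enabled team.
volume_factor = ai_team_vulns / baseline_vulns  # about 1.912
cost_factor = 1 + cost_per_fix_uplift           # 1.745
total_factor = volume_factor * cost_factor      # about 3.336

# What the increase would be if the two effects merely added up.
additive_increase = (volume_factor - 1) + cost_per_fix_uplift

print(f"Multiplicative increase: {total_factor - 1:.0%}")  # 234%
print(f"Additive increase:       {additive_increase:.0%}")  # 166%
```

The gap between the two results is the interaction term: more vulnerabilities, each costing more to fix.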

Visualizing the Impact

| Metric | Human/Baseline | AI/AI-Enabled Team |
|---|---|---|
| Vulnerability-free code rate | 75%* | 55% |
| Vulnerability rate | 25%* | 45% |
| Cyclomatic complexity | 3.1 | 5.0 |
| Vulnerability generation | 25 | 47.8 |
| Cost per vulnerability | - | +74.5% |
| Total cost impact | - | +234% |

*Note: Some recent reports put the human vulnerability-free rate at 55%, matching AI, but the trend of increased risk and cost with AI remains clear.

What Does This Mean for Your Team?

Key Finding:

AI-enabled teams are not just producing more code; they’re producing nearly twice as many vulnerabilities, and each one is significantly more expensive to fix.

Unless your organization has an exceptionally high risk appetite, and the increased output from AI is so valuable that it offsets these costs, you cannot afford to ignore these trends. The only sustainable path forward is to invest in skilled application security engineers and software developers who can ensure the quality and security of your codebase.

AI can be a powerful accelerator, but without robust human oversight and a strong security culture, it’s just as likely to accelerate you into a security crisis.

Final Thoughts

AI is transforming software development, but it’s not a silver bullet. If you want to harness its productivity benefits without drowning in vulnerabilities and remediation costs, you need to double down on secure coding practices, code review, and continuous security education. The future belongs to teams that can balance speed with safety.

Summary:

AI coding tools can supercharge productivity, but they also supercharge your vulnerability backlog and remediation costs. The solution isn’t to avoid AI, it’s to pair it with rigorous human oversight and a relentless focus on security.

We need to mentor future generations.

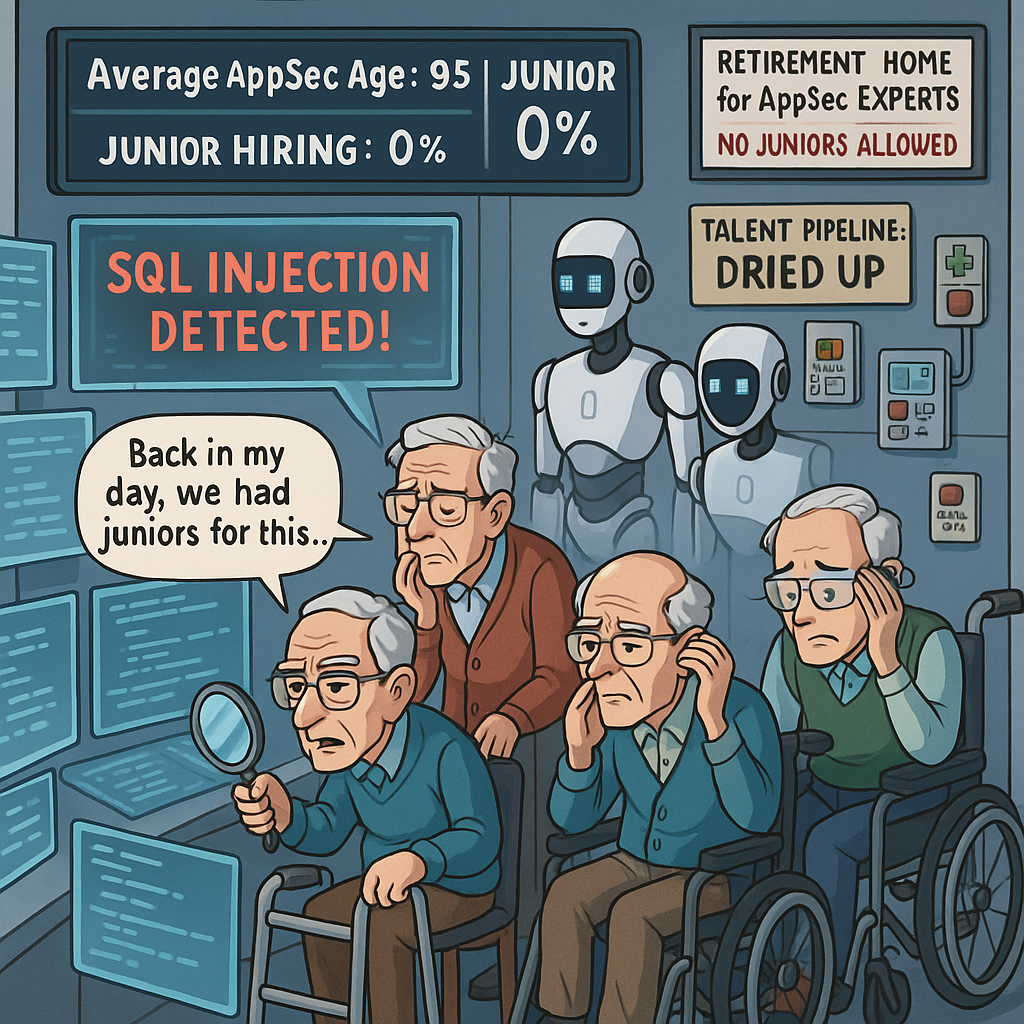

The Critical Need for Human Oversight in AI-Generated Code

Every piece of code generated by AI demands careful review, and those tasked with this responsibility need to approach it with a critical eye. This isn’t a simple box-ticking exercise. Properly evaluating AI-generated code requires real experience, a strong technical foundation, and the ability to understand both the intent and the implementation behind the code.

Here’s where I see a serious problem emerging: if this level of expertise is essential, but we’re no longer hiring junior developers, or worse, we’re only hiring them to write prompts for systems they’ve never actually built, we’re setting ourselves up for trouble. Junior team members who lack hands-on experience with real-world software development are not equipped to craft meaningful prompts or to evaluate the output effectively. They simply don’t know what they’re asking for, let alone how to assess what the AI delivers.

This dynamic creates a worrying gap. We need to recognize that experienced engineers don’t appear out of thin air. They’re cultivated through mentorship, hands-on problem solving, and exposure to the full lifecycle of software development. The people who have the depth of experience needed to supervise and validate AI-generated code are becoming a dwindling resource. If we don’t invest in developing the next generation of engineers, by giving them opportunities to learn, build, and grow alongside more experienced colleagues, we won’t have anyone ready to step into those critical oversight roles as the current generation of senior engineers moves on.

If we want to maintain high standards in software quality and security, we need to build and nurture a pipeline of talent. That means mentorship, real coding experience, and a commitment to growing expertise, not just prompt engineering and not just outsourcing the heavy lifting to AI and hoping for the best. Otherwise, we risk leaving the future of our codebases in the hands of algorithms, with fewer and fewer people able to ensure that what’s being delivered is truly fit for purpose.

Where have your ethics gone?

AI, Layoffs, and the Question of Salary Dumping: What’s Really Happening?

Recently, we’ve witnessed several multinational corporations laying off large numbers of software engineers and other staff, attributing these sweeping cuts to the rise of AI. The narrative has been that AI can now fill these roles, eliminating the need for so many skilled professionals. Yet, just a few months later, many of these same companies quietly started rehiring, an admission, perhaps, that their initial assumptions were off the mark. Or, I can’t help but wonder, was something else at play?

What I’d really like to know is: were these returning employees rehired under the same working conditions, or did they come back to diminished terms? While I haven’t yet seen concrete data, my suspicion is that many were brought back on less favorable contracts.

This leads me to a theory: could this be a deliberate strategy, an endgame of salary dumping? Let’s look at the mechanics. By laying off a significant portion of their engineering workforce, these companies flooded the job market: the supply of available engineers rose sharply at the same moment that the number of open roles fell. Many laid-off individuals, faced with economic uncertainty, may have been more willing to accept lower salaries or reduced benefits just to get back into work.

It was always clear that AI would accelerate the more tedious aspects of software engineering, but I have yet to see it handle complex coding tasks without substantial oversight, hand-holding, and multiple review cycles. I’ve even had AI systems push back, admitting that certain problems I presented were too complicated. The idea that an entire engineering workforce could be replaced by AI, then, simply doesn’t hold water in practice.

Given all this, it’s hard to believe these layoffs were just a miscalculation about AI’s capabilities. It seems more plausible that we’re seeing a calculated move to reset salary expectations and employment conditions across the industry. If so, it’s a trend worth watching closely, and one we need to talk about openly.